JUPITER Technical Overview

A Deep Dive Into JUPITER's Building Blocks

JUPITER, the “Joint Undertaking Pioneer for Innovative and Transformative Exascale Research”, will be the first exascale supercomputer in Europe. The system is provided by a ParTec-Eviden supercomputer consortium and was procured by EuroHPC JU in cooperation with the Jülich Supercomputing Centre (JSC). It will be installed in 2024 at the Forschungszentrum Jülich campus in Germany.

The purpose of this article is to give background information and provide more technical details on the architectures chosen for the different components. Given that the system installation has not yet started, the information is subject to change and might be modified at any point in time.

JUPITER at a Glance

JUPITER will be the first European supercomputer of the Exascale class and the system with the highest compute performance procured by EuroHPC JU, whose portfolio includes multiple Petascale and Pre-Exascale systems throughout Europe. The total budget of 500 million Euro includes acquisition and operational costs. The installation will start in 2024.

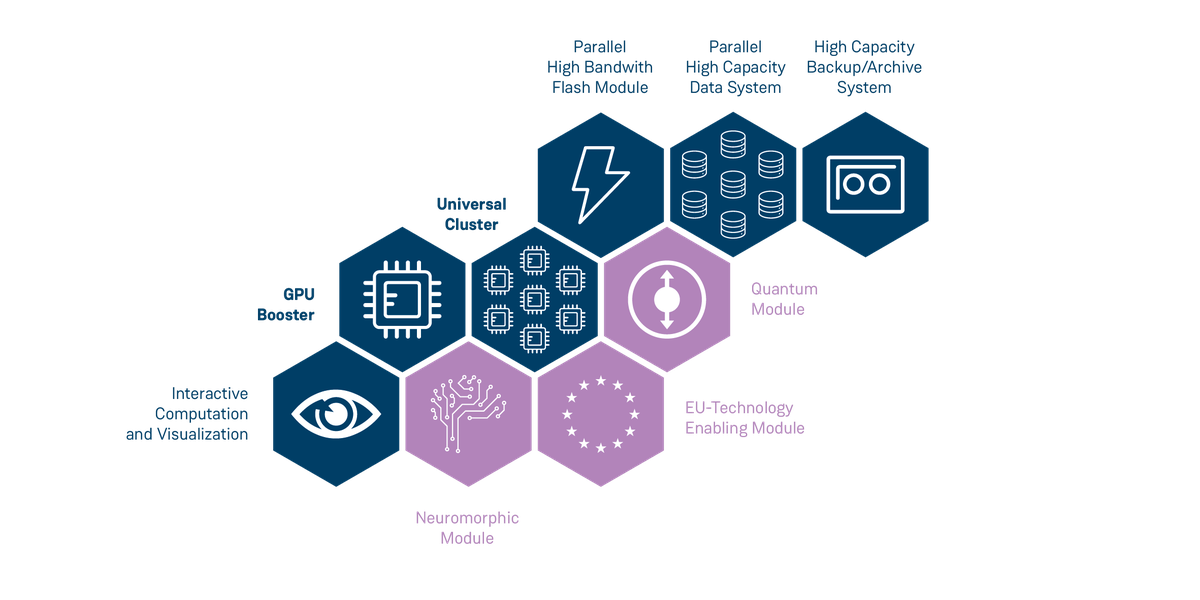

Following the dynamic Modular System Architecture (dMSA) approach implemented by JSC and other European partners in the course of the DEEP research projects, the JUPITER system will consist of two compute modules, a Booster and a Cluster Module. The Booster Module will deliver 1 ExaFLOP/s FP64 performance, measured through the HPL benchmark. It implements a highly scalable system architecture based on the latest generation of NVIDIA GPUs in the Grace-Hopper superchip form factor. The general-purpose Cluster Module targets workflows that do not benefit from accelerator-based computing. It utilizes the first European HPC Processor, Rhea by SiPearl, to provide a uniquely high memory bandwidth supporting mixed workloads. Both modules will be deployed independently.

In addition, a 21 Petabyte Flash Module (ExaFLASH) is provided based on the IBM Storage Scale software and a corresponding storage appliance based on IBM ESS 3500 building blocks. Information about the high-capacity Storage Module (ExaSTORE) as well as backup and archive will follow in the coming months, once the corresponding procurements have finished.

All compute nodes of JUPITER as well as storage and service systems are connected to a large NVIDIA Mellanox InfiniBand NDR fabric implementing a DragonFly+ topology.

The aforementioned system configuration is the result of a public procurement that took prior JSC systems like JUWELS, the Jülich Wizard on European Leadership Science, as a blueprint. The current JUWELS and JSC userbase played a key role when defining requirements, utilizing a large set of benchmarks and applications for the assessment of the offers.

JUPITER Booster

The Booster module of JUPITER (short: the Booster) will feature roughly 6000 compute nodes to achieve the compute performance of 1 ExaFLOP/s (FP64, HPL) – and much more in lower-precision computing (for example, more than 70 ExaFLOP/s of theoretical 8-bit compute performance with sparsity). The driving chip of the system is the NVIDIA Hopper GPU, the latest generation of NVIDIA’s HPC-targeted general-purpose GPU line. The GPUs are deployed in the Grace-Hopper superchip form factor (GH200), a novel, tight combination of NVIDIA’s first CPU (Grace) and latest-generation GPU (Hopper).

Each Booster node features four GH200 superchips, i.e. four GPUs each closely attached to a partner CPU (via NVLink Chip-to-Chip). With 72 cores per Grace CPU, a node has a total of 288 CPU cores (Arm). Within a node, all GPUs are connected via NVLink 4, and all CPUs are connected via CPU NVLink connections.
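The node composition above allows a quick back-of-the-envelope estimate of the Booster's overall scale. The following is a minimal sketch, assuming exactly 6000 nodes (the article only says "roughly 6000") and an even split of the 1 ExaFLOP/s HPL target across all GPUs; the function and constant names are illustrative only.

```python
# Back-of-the-envelope Booster totals from the figures quoted above.
# BOOSTER_NODES is an approximation: the article says "roughly 6000".
BOOSTER_NODES = 6000
SUPERCHIPS_PER_NODE = 4      # one Hopper GPU plus one Grace CPU each
CORES_PER_GRACE = 72
HPL_TARGET_FLOPS = 1e18      # 1 ExaFLOP/s, FP64


def booster_totals(nodes: int = BOOSTER_NODES) -> dict:
    """Return GPU count, CPU core counts, and the HPL share per GPU."""
    gpus = nodes * SUPERCHIPS_PER_NODE
    return {
        "gpus": gpus,
        "cores_per_node": SUPERCHIPS_PER_NODE * CORES_PER_GRACE,
        "cpu_cores": gpus * CORES_PER_GRACE,
        # Assumes the HPL target is split evenly across all GPUs.
        "hpl_tflops_per_gpu": HPL_TARGET_FLOPS / gpus / 1e12,
    }


if __name__ == "__main__":
    for key, value in booster_totals().items():
        print(f"{key}: {value:,.1f}")
```

Under these assumptions, the Booster comprises about 24000 GPUs, and each GPU needs to sustain on the order of 42 TFLOP/s in HPL to reach the target.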

The Hopper H100 GPU variant installed in the system offers 96 GB of HBM3 memory, accessible with 4 TB/s bandwidth from the multiprocessors of the GPU. Compared to previous NVIDIA GPU generations, H100 offers more multiprocessors, larger caches, new core architectures, and further advancements; NVIDIA's documentation provides an overview. Using NVLink 4, one GPU can transmit data to any other GPU in a node at 150 GB/s per direction.

Each GPU is attached to a Grace CPU, NVIDIA’s first HPC CPU, utilizing the Arm instruction set. The Grace CPU has 72 Neoverse V2 cores, each SVE2-enabled with four 128-bit functional units. The CPU can access 120 GB of LPDDR5X memory with a bandwidth of 500 GB/s. The key feature of the superchip design is the tight integration between CPU and GPU, offering not only a high bandwidth (450 GB/s per direction) but also more homogeneous programming. Again, details about Grace and the properties that make the combination a superchip can be found in NVIDIA documentation.
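The vector configuration of the Grace cores can be translated into a rough theoretical FP64 throughput per clock cycle. The sketch below assumes that each of the four 128-bit units can issue one fused multiply-add (counted as two floating-point operations) per cycle, which the article does not state. The clock frequency is left as an input because no value is given here; the 3.0 GHz used in the example is a placeholder, not a JUPITER specification.

```python
def fp64_flops_per_cycle(units: int = 4, unit_bits: int = 128,
                         fma: bool = True) -> int:
    """Theoretical FP64 operations per cycle for one core.

    Assumption (not from the article): every vector unit issues one
    instruction per cycle, and an FMA counts as two operations.
    """
    lanes = unit_bits // 64          # FP64 values per 128-bit vector
    return units * lanes * (2 if fma else 1)


def grace_peak_gflops(clock_ghz: float, cores: int = 72) -> float:
    """Theoretical FP64 peak of one Grace CPU in GFLOP/s."""
    return cores * fp64_flops_per_cycle() * clock_ghz


if __name__ == "__main__":
    print("FLOP/cycle/core:", fp64_flops_per_cycle())
    # 3.0 GHz is a placeholder clock, chosen only for illustration.
    print("Peak at 3.0 GHz:", grace_peak_gflops(3.0), "GFLOP/s")
```

Sustained performance depends on clock behaviour under load and on memory bandwidth, so such a figure is an upper bound at best.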

Each CPU is connected to the three neighboring CPUs in a node via dedicated CPU NVLink (cNVLink) connections, offering 100 GB/s bi-directional bandwidth. In addition, each CPU has a PCIe Gen 5 connection to its associated InfiniBand adapter (HCA). Four latest-generation InfiniBand NDR HCAs are available in a node, each with 200 Gbit/s bandwidth.
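The per-superchip figures from the preceding paragraphs can be aggregated into per-node totals. The following is a minimal sketch with illustrative class and attribute names; it only multiplies the quoted values, converts the InfiniBand rate from Gbit/s to GB/s, and ignores protocol overheads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoosterNode:
    """Per-node aggregates derived from the quoted per-superchip values."""
    superchips: int = 4
    hbm3_gb: int = 96              # HBM3 per GPU
    hbm3_tb_per_s: float = 4.0     # HBM3 bandwidth per GPU
    lpddr5x_gb: int = 120          # LPDDR5X per CPU
    c2c_gb_per_s: int = 450        # CPU-GPU link, per direction
    hca_gbit_per_s: int = 200      # one InfiniBand NDR HCA

    @property
    def gpu_memory_gb(self) -> int:
        return self.superchips * self.hbm3_gb

    @property
    def cpu_memory_gb(self) -> int:
        return self.superchips * self.lpddr5x_gb

    @property
    def total_memory_gb(self) -> int:
        return self.gpu_memory_gb + self.cpu_memory_gb

    @property
    def hbm_bandwidth_tb_per_s(self) -> float:
        return self.superchips * self.hbm3_tb_per_s

    @property
    def injection_gb_per_s(self) -> float:
        # One HCA per superchip; 8 bits per byte, overheads ignored.
        return self.superchips * self.hca_gbit_per_s / 8

    @property
    def c2c_to_hca_ratio(self) -> float:
        # How much faster the CPU-GPU link is than one network adapter.
        return self.c2c_gb_per_s / (self.hca_gbit_per_s / 8)


if __name__ == "__main__":
    node = BoosterNode()
    print(node.gpu_memory_gb, node.cpu_memory_gb, node.total_memory_gb)
    print(node.hbm_bandwidth_tb_per_s, node.injection_gb_per_s)
    print(node.c2c_to_hca_ratio)
```

The gap between on-node bandwidth and network injection bandwidth suggests that keeping data movement within a superchip or node, where possible, is likely to pay off.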

The system is warm-water-cooled, using the BullSequana XH3000 blade and rack design.

JUPITER Cluster

The Cluster module will feature more than 1300 nodes to achieve a performance of more than 5 PetaFLOP/s (FP64, HPL). The silicon powering the Cluster is the Rhea1 processor, a processor designed in Europe through the EPI projects and commercialized by SiPearl. Rhea – like Grace – utilizes the Arm instruction set architecture (ISA), with the unique feature of providing an extraordinarily high memory bandwidth by using 64 GB of HBM2e memory.

Each Cluster node features two Rhea1 processors, each containing 80 Arm Neoverse Zeus cores and supporting the Scalable Vector Extension (SVE) for enhanced performance. Beyond 2×64 GB of HBM memory, each node provides an additional 512 GB of DDR5 main memory. Dedicated nodes with 1 TB of main memory will be available as well.
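For sizing memory-bound jobs, it can help to express the Cluster node memory per core. The sketch below uses only the figures above; the function name is illustrative, and treating the large-memory nodes' 1 TB as 1024 GB is an assumption.

```python
RHEA_PER_NODE = 2
CORES_PER_RHEA = 80
HBM_PER_RHEA_GB = 64
DDR5_PER_NODE_GB = 512


def cluster_node_summary(ddr5_gb: int = DDR5_PER_NODE_GB) -> dict:
    """Core count and memory capacity per Cluster node and per core."""
    cores = RHEA_PER_NODE * CORES_PER_RHEA
    hbm_gb = RHEA_PER_NODE * HBM_PER_RHEA_GB
    return {
        "cores": cores,
        "hbm_gb": hbm_gb,
        "total_memory_gb": hbm_gb + ddr5_gb,
        "hbm_gb_per_core": hbm_gb / cores,
        "ddr5_gb_per_core": ddr5_gb / cores,
    }


if __name__ == "__main__":
    print("standard node:", cluster_node_summary())
    # Assumption: "1 TB of main memory" is taken as 1024 GB of DDR5.
    print("large-memory node:", cluster_node_summary(ddr5_gb=1024))
```

A standard node thus offers 160 cores with 0.8 GB of HBM and 3.2 GB of DDR5 per core.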

The nodes are again based on the BullSequana XH3000 architecture and will be integrated into the global NVIDIA Mellanox InfiniBand interconnect with one NDR200 link per node.

JUPITER High-Speed Interconnect

At the core of the system, the InfiniBand NDR network connects 25 DragonFly+ groups in the Booster module, as well as 2 extra groups in total for the Cluster module, storage, and administrative infrastructure. The network is fully connected, with more than 11000 global links of 400 Gb/s each connecting all groups with each other.

Inside each group, connectivity is maximized through a full fat-tree topology, in which leaf and spine switches use dense 400 Gb/s links. Leaf switches rely on split ports to connect to 4 HCAs per node on the Booster module (1 HCA per node on the Cluster module), each with 200 Gb/s.

In total, the network comprises almost 51000 links and 102000 logical ports, with 25400 end points and 867 high-radix switches, and still has spare ports for future expansions, for example for further computing modules.
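The quoted network totals can be cross-checked against the node counts with simple arithmetic. The following is a minimal sketch, assuming the rounded node counts from the module descriptions (6000 Booster nodes, 1300 Cluster nodes), two logical ports per link, and an even distribution of Booster nodes over the 25 groups; none of these assumptions is confirmed in the article.

```python
BOOSTER_NODES = 6000          # "roughly 6000"
CLUSTER_NODES = 1300          # "more than 1300"
HCAS_PER_BOOSTER_NODE = 4
HCAS_PER_CLUSTER_NODE = 1
BOOSTER_GROUPS = 25


def compute_endpoints(booster: int = BOOSTER_NODES,
                      cluster: int = CLUSTER_NODES) -> int:
    """HCAs contributed by compute nodes only (no storage or service)."""
    return booster * HCAS_PER_BOOSTER_NODE + cluster * HCAS_PER_CLUSTER_NODE


def logical_ports(links: int) -> int:
    """Each link terminates in one logical port at either end."""
    return 2 * links


def hcas_per_leaf_port(port_gbit: int = 400, hca_gbit: int = 200) -> int:
    """Number of HCAs one split 400 Gb/s leaf port can serve."""
    return port_gbit // hca_gbit


def booster_nodes_per_group(nodes: int = BOOSTER_NODES) -> float:
    """Assumes nodes are spread evenly over the DragonFly+ groups."""
    return nodes / BOOSTER_GROUPS


if __name__ == "__main__":
    print("compute end points:", compute_endpoints())
    print("ports for 51000 links:", logical_ports(51000))
    print("HCAs per leaf port:", hcas_per_leaf_port())
    print("Booster nodes per group:", booster_nodes_per_group())
```

With these inputs, compute nodes account for about 25300 of the 25400 end points, which would leave on the order of 100 for storage and service systems. Since the node counts are rounded, this remainder is only indicative.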

The network has been designed with HPC and AI use-cases in mind. Its adaptive routing and advanced in-network computing capabilities enable a very well-balanced, scalable, and cost-effective fabric for ground-breaking science.

ExaFLASH and ExaSTORE

JUPITER will provide access to multiple storage systems. As part of the JUPITER contract, a storage system targeting 21 PB of useable high-bandwidth and low-latency flash storage will be provided as scratch storage.

The scratch storage consists of 20 IBM Storage Scale 6000 systems with NVMe drives, running the IBM Storage Scale software. With 29 PB of raw and 21 PB of useable capacity, it is designed to provide more than 2 TB/s write and 3 TB/s read performance.
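The capacity and bandwidth targets give a feeling for how long bulk transfers to and from the scratch storage would take at best. The sketch below assumes decimal units (1 PB = 1000 TB) and treats the quoted minimum bandwidths as sustained rates; both are simplifications, and the function names are illustrative.

```python
RAW_PB = 29
USABLE_PB = 21
WRITE_TB_PER_S = 2.0    # "more than 2 TB/s"
READ_TB_PER_S = 3.0     # "more than 3 TB/s"


def usable_fraction(raw_pb: float = RAW_PB,
                    usable_pb: float = USABLE_PB) -> float:
    """Share of raw capacity left after redundancy and overheads."""
    return usable_pb / raw_pb


def transfer_hours(capacity_pb: float, tb_per_s: float) -> float:
    """Hours to move capacity_pb at a sustained rate (1 PB = 1000 TB)."""
    return capacity_pb * 1000 / tb_per_s / 3600


if __name__ == "__main__":
    print(f"usable fraction: {usable_fraction():.1%}")
    print(f"full write: {transfer_hours(USABLE_PB, WRITE_TB_PER_S):.2f} h")
    print(f"full read:  {transfer_hours(USABLE_PB, READ_TB_PER_S):.2f} h")
```

Under these assumptions, roughly 72 % of the raw capacity is useable, and writing the full 21 PB once would take just under three hours.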

In addition, a high-capacity Storage Module with more than 300 PB of raw capacity as well as a tape infrastructure for backup and archive purposes with over 700 PB capacity will be provided. The systems will be directly connected to JUPITER but are part of independent procurements. Dedicated servers for data movements between the individual storage systems will be available.

Service Partition and System Management

The installation and operation of JUPITER will be done by the unique JUPITER Management Stack. This is a combination of xScale (Atos/Eviden), ParaStation Modulo (ParTec), and software components from JSC.

Slurm is used for workload and resource management, extended with ParaStation components. The backbone of the background management stack is a Kubernetes environment, relying on highly available Ceph storage. The management stack will be used to install and manage all hardware and software components of the system.

More than 20 login nodes will provide SSH access to the different modules of the system. In addition, the system will be integrated into the Jupyter environment at JSC and will be made available via UNICORE.
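Since Slurm handles workload management, a job on the Booster could plausibly be described by a batch script that mirrors the node layout (four superchips with 72 cores each). The following is a hypothetical sketch that renders such a script using standard `sbatch` options. The partition name `booster`, the GPU resource name `gpu`, the executable `./app`, and the mapping of one task per superchip are all assumptions; the actual JUPITER configuration has not been published here.

```python
def render_booster_job(nodes: int, walltime: str = "00:30:00",
                       partition: str = "booster",
                       executable: str = "./app") -> str:
    """Render a Slurm batch script with one task per GH200 superchip.

    The partition, GRES name, and executable are hypothetical
    placeholders, not confirmed JUPITER settings.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",   # hypothetical name
        f"#SBATCH --nodes={nodes}",
        "#SBATCH --ntasks-per-node=4",        # one task per superchip
        "#SBATCH --cpus-per-task=72",         # cores of one Grace CPU
        "#SBATCH --gres=gpu:4",               # assumed GRES name
        f"#SBATCH --time={walltime}",
        "",
        f"srun {executable}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_booster_job(nodes=2))
```

The rendered text could be saved to a file on a login node and submitted with `sbatch`; the site documentation, once available, should take precedence over these placeholder values.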