Artificial Intelligence-Driven Detection of Pushing Behavior in Human Crowds

In this research, a novel automatic AI-based framework has been developed to detect pushing behavior in human crowds, specifically in video recordings and live camera streams of crowded event entrances. The primary goal is to provide organizers and security teams with the necessary knowledge to alleviate pushing behavior and its associated risks, enhancing crowd comfort and preventing potential life-threatening situations. The framework consists of three phases:

First phase: Identifying pushing regions in video recordings of crowds

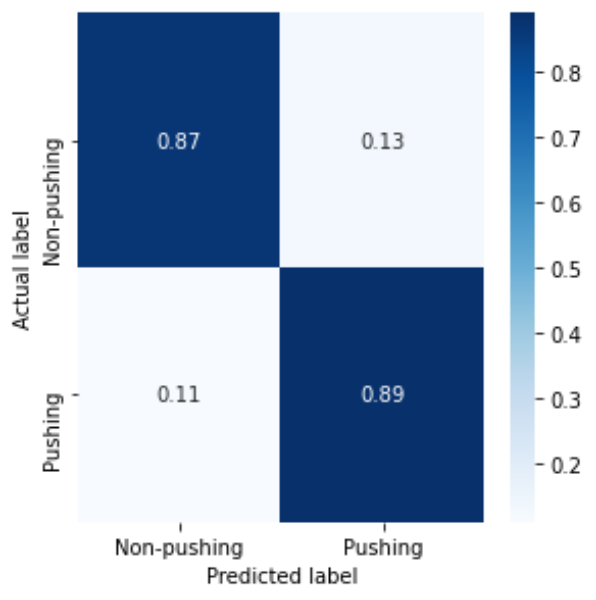

In this phase, we developed a new methodology utilizing a pre-trained deep learning optical flow model, an adapted and trained EfficientNetV1B0-based Convolutional Neural Network (CNN), and a false reduction algorithm. This approach aims to identify regions in crowd videos where individuals are engaging in pushing. Each identified region corresponds to an area between 1 and 2 square meters on the ground. By identifying these pushing regions, we can gain a better understanding of the timing and location of such behavior. This knowledge is essential for developing effective crowd management strategies and improving the design of public spaces.
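
The text above does not spell out the rules of the false reduction algorithm, so the following is only a hypothetical illustration of the general idea of post-processing patch-level predictions, not the authors' algorithm. It assumes each frame is divided into a grid of patches labeled 1 (pushing) or 0 (non-pushing) and clears any pushing patch that has no pushing neighbor. The function name `suppress_isolated_patches` and the neighbor rule are assumptions made for this sketch.

```python
from typing import List

Grid = List[List[int]]  # 1 = pushing patch, 0 = non-pushing patch


def suppress_isolated_patches(labels: Grid) -> Grid:
    """Clear every pushing patch that has no pushing patch among its 8 neighbors.

    Hypothetical rule for illustration only; the real algorithm may differ.
    """
    rows, cols = len(labels), len(labels[0])
    cleaned = [row[:] for row in labels]  # copy so the input stays unchanged
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != 1:
                continue
            has_pushing_neighbor = any(
                labels[nr][nc] == 1
                for nr in range(max(0, r - 1), min(rows, r + 2))
                for nc in range(max(0, c - 1), min(cols, c + 2))
                if (nr, nc) != (r, c)
            )
            if not has_pushing_neighbor:
                cleaned[r][c] = 0
    return cleaned


# Made-up patch predictions for one frame (3 x 4 patches).
frame_labels = [
    [1, 0, 0, 0],  # isolated prediction in the top-left corner
    [0, 0, 1, 1],  # two adjacent pushing patches support each other
    [0, 0, 0, 0],
]
cleaned_labels = suppress_isolated_patches(frame_labels)
```

In this made-up example the isolated top-left patch is cleared, while the two adjacent pushing patches are kept.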

The approach has been trained and evaluated using several real-world experiments, each simulating a straight entrance with a single gate. Experimental results show that the proposed approach achieved an accuracy and F1 score of 88%.
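
For readers less familiar with the two reported metrics, the sketch below shows how accuracy and F1 score are computed from patch-level confusion counts using their standard definitions. The counts are invented purely for illustration and are not taken from the experiments.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all patches that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall for the pushing class."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical counts: tp/fn are pushing patches, tn/fp are non-pushing patches.
example_accuracy = accuracy(tp=40, tn=45, fp=5, fn=10)
example_f1 = f1_score(tp=40, fp=5, fn=10)
```

Accuracy weighs both classes together, whereas F1 focuses on how well the pushing class is found, which matters when pushing patches are much rarer than non-pushing ones.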

Second phase: Real-time detection of pushing regions in live camera streams of dense crowds

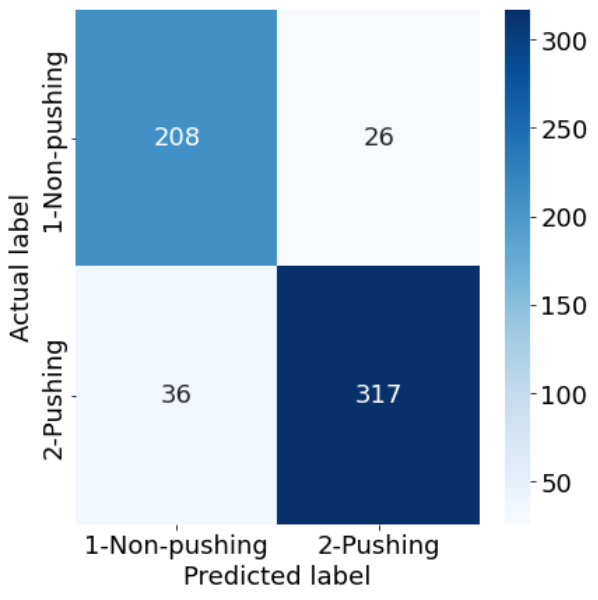

In the second phase, we proposed a new cloud-based deep learning approach to identify pushing regions within dense crowds at an early stage. Automatic and timely identification of such behavior would enable organizers and security forces to intervene before situations become uncomfortable. Furthermore, this early detection can help assess the efficiency of implemented plans and strategies, allowing for identification of vulnerabilities and potential areas of improvement.

This approach combines a robust, fast, pre-trained deep optical flow model, an adapted and trained EfficientNetV2B0-based CNN model, and a color wheel method to accurately analyze the video streams and detect pushing patches. Additionally, it leverages live capturing technology and a cloud environment to provide powerful computational resources, enabling the real-time collection of crowd video streams and the delivery of early-stage results.
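
As a rough illustration of what a color wheel method does, the sketch below maps a single optical flow vector to an RGB color: the direction of motion selects the hue and the speed selects the saturation. This follows a common HSV-based convention and is an assumption of this sketch; the exact color wheel used in the framework may differ. The function name `flow_to_rgb` and the `max_magnitude` normalization parameter are hypothetical.

```python
import colorsys
import math
from typing import Tuple


def flow_to_rgb(u: float, v: float, max_magnitude: float) -> Tuple[int, int, int]:
    """Encode one flow vector (u, v) as an 8-bit RGB color.

    Hue encodes the motion direction, saturation encodes the speed
    relative to max_magnitude; zero motion maps to white.
    """
    magnitude = math.hypot(u, v)
    angle = math.atan2(v, u) % (2 * math.pi)  # direction in [0, 2*pi)
    hue = angle / (2 * math.pi)               # normalized to [0, 1)
    if max_magnitude > 0:
        saturation = min(magnitude / max_magnitude, 1.0)
    else:
        saturation = 0.0
    r, g, b = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    return round(r * 255), round(g * 255), round(b * 255)


still = flow_to_rgb(0.0, 0.0, max_magnitude=10.0)         # no motion
moving_right = flow_to_rgb(10.0, 0.0, max_magnitude=10.0)  # fast motion along +u
moving_left = flow_to_rgb(-10.0, 0.0, max_magnitude=10.0)  # fast motion along -u
```

Applying such a mapping to every pixel of a flow field yields an image in which direction and speed of motion are visible as colors, which a CNN can then consume like an ordinary RGB frame.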

Several real-world experiments, simulating both straight and 90° corner crowded event entrances with one and two gates, were used to train and evaluate the cloud-based approach. The experimental findings indicate that this approach detected pushing regions from the live camera stream of crowded event entrances with an 87% accuracy rate and within a reasonable time delay.

Third phase: Annotating individuals engaged in pushing within crowds

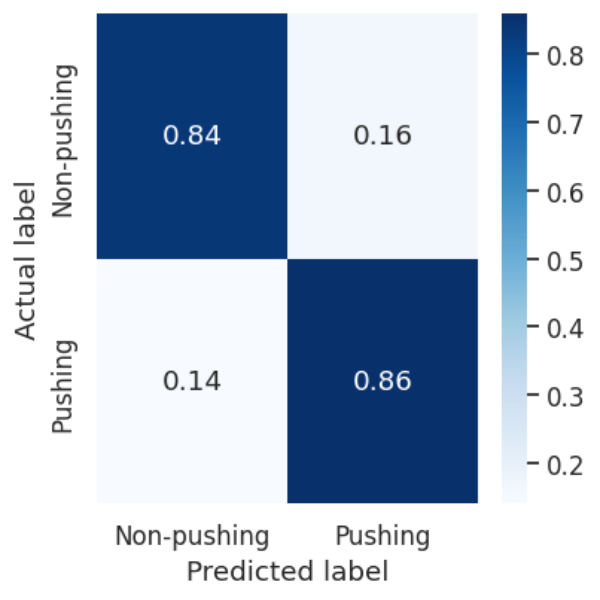

While the first two phases focus on region-based detection, the third phase introduced a novel approach to identify individuals engaged in pushing within crowd videos. By analyzing the dynamics of pushing behavior at the microscopic level, we can gain more precise insights into crowd behavior and interactions.

The presented approach extends the previous models by leveraging the Voronoi diagram and by using pedestrian trajectory data as an auxiliary input source. It was trained and evaluated in the same way as the second-phase approach, but with more real-world experiments. The experimental findings demonstrate that the approach achieved an accuracy of 85%.
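
To make the role of the Voronoi diagram more concrete, the sketch below builds a discrete approximation of one: every grid cell is assigned to the nearest pedestrian position, so each pedestrian ends up with a local region of their own. The positions, the grid size, and the function names are assumptions for illustration; how the framework actually constructs and uses the regions is not described here.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def nearest_site(point: Point, sites: List[Point]) -> int:
    """Index of the site closest to point (squared Euclidean distance)."""
    return min(
        range(len(sites)),
        key=lambda i: (point[0] - sites[i][0]) ** 2 + (point[1] - sites[i][1]) ** 2,
    )


def discrete_voronoi(sites: List[Point], width: int, height: int) -> List[List[int]]:
    """Label each cell of a width x height grid with its nearest site index.

    Cell centers are used as sample points; the result approximates the
    Voronoi regions of the sites on the grid.
    """
    return [
        [nearest_site((x + 0.5, y + 0.5), sites) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical positions of two pedestrians taken from trajectory data.
pedestrians = [(1.0, 1.0), (5.0, 1.0)]
regions = discrete_voronoi(pedestrians, width=6, height=2)
```

With these two made-up positions, the left half of the grid is assigned to the first pedestrian and the right half to the second. A library routine such as `scipy.spatial.Voronoi` would give the exact polygonal cells instead of a grid approximation.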

Articles

Ahmed Alia, Mohammed Maree, and Mohcine Chraibi. “A Hybrid Deep Learning and Visualization Framework for Pushing Behavior Detection in Pedestrian Dynamics.” Sensors 22, no. 11 (2022): 4040. https://doi.org/10.3390/s22114040. https://github.com/PedestrianDynamics/DL4PuDe

Ahmed Alia, Mohammed Maree, Mohcine Chraibi, Anas Toma, and Armin Seyfried. “A Cloud-based Deep Learning Framework for Early Detection of Pushing at Crowded Event Entrances.” IEEE Access (2023). https://doi.org/10.1109/ACCESS.2023.3273770. https://github.com/PedestrianDynamics/CloudFast-DL4PuDe

Ahmed Alia, Mohammed Maree, Mohcine Chraibi, and Armin Seyfried. “A Novel Voronoi-based Convolutional Neural Network Framework for Pushing Person Detection in Crowd Videos.” Complex & Intelligent Systems (2024). https://doi.org/10.1007/s40747-024-01422-2. https://github.com/PedestrianDynamics/VCNN4PuDe